Runtime, not just a library

Contenox was a complete runtime environment – with API gateway, background workers, storage, and observability – not just a collection of helper functions.

Archived runtime project for sovereign GenAI applications

Contenox was a self-hostable runtime for deterministic, chat-native AI applications. It orchestrated language models, tools, and business logic without giving up control over data, routing, or behaviour.

The Contenox venture has been shut down. The kernel remains available as open-source software and can be used as a reference implementation or for experiments in AI workflow runtimes.

Contenox is no longer under active development. This page remains as documentation and context for engineers, analysts, and curious people exploring the ideas and architecture behind the project.

A short walkthrough of the chat interface, state-machine workflows, and observability tools. The demo reflects the state of the project before it was sunset.

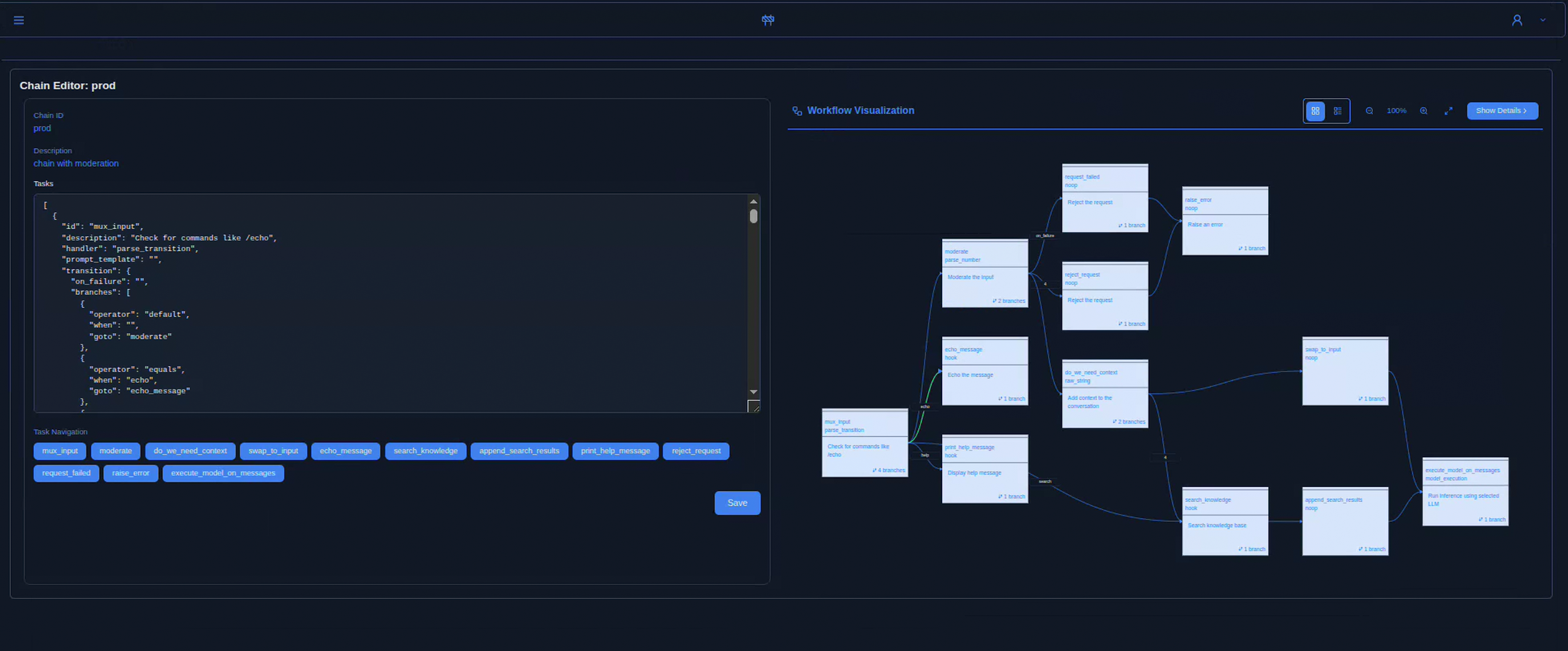

The Contenox UI combines a chat client with a visual workflow inspector and execution traces – so you can see exactly which states, tools, and hooks were involved in each conversation.

Contenox was a self-hostable runtime for building and operating enterprise-grade AI agents, semantic search systems, and chat-driven applications. It modelled AI behaviour as explicit, observable state machines instead of opaque prompt chains.

Contenox was a complete runtime environment – with API gateway, background workers, storage, and observability – not just a collection of helper functions.

Workflows were explicit graphs of tasks, handlers, and transitions. Every step was visible, controllable, and replayable.

Users interacted via chat, but under the hood each message drove a deterministic workflow – from RAG queries to tool calls and side effects.

It could run on-prem, in your cloud, or air-gapped. Requests could be routed across OpenAI, Ollama, vLLM, or custom backends without lock-in.

Even though the Contenox venture has ended, the core thesis remains relevant: modern AI systems drift into opacity. Runtimes that make logic, data flow, and side effects explicit help teams reason about behaviour instead of guessing.

The runtime was designed so teams could own data, execution, and decision boundaries – with no hidden SaaS layer that might change behaviour unexpectedly.

Workflows were deterministic state machines: tasks, transitions, retries, and human approvals were defined explicitly instead of being buried in prompts.

Every state change, model call, and hook invocation was logged and traceable – a foundation for auditing, governance, and regulation.

During its active lifetime, Contenox complemented APIs like OpenAI’s tools or MCP. It was most useful once single AI calls turned into real workflows.

Contenox executed AI workflows as explicit state machines. Every step – LLM call, data fetch, or external action – was a task with defined inputs, outputs, and transitions.

Agents were extended with hooks – remote services that integrated external APIs, databases, or tools. Any OpenAPI-compatible endpoint could be registered and turned into callable tools for models.

Workflows were defined in YAML or via API. Each execution was logged and traceable: transitions, model calls, hook results, and intermediate state were all visible.

Built-in RAG pipeline with document ingestion, embeddings, and vector search (e.g. via Vald) enabled agents to answer based on your data.

Requests could be routed across Ollama, vLLM, OpenAI, or other providers, with configurable fallbacks and policies per task.

Contenox was built as a modular, container-first system. The runtime could be embedded into existing stacks or deployed as its own AI infrastructure layer.

Contenox was not a black-box SaaS, but software you could run and adapt – a runtime that sat between LLMs and real systems.

The core kernel of Contenox remains open-sourced at github.com/contenox/runtime .

A preliminary OpenAPI specification for the runtime API is available as JSON and YAML. It reflects the last public state of the project.

The Contenox venture has been shut down. There is no hosted product, no commercial offering, and the code is not actively maintained. Issues and pull requests on GitHub may only be reviewed occasionally.

For occasional questions or historical context about the project, you can still reach out at hello@contenox.com, but replies cannot be guaranteed.